In order to make profitable decisions, we need good information. Whether we base our decisions on sales, customer perceptions or the number of widgets we shipped last month, our information comes from some system that collects and measures relevant data for us.

In my Six Sigma Black Belt class we recently discussed the challenges of developing a meaningful measurement system. As usual, the theory sounds easy – until it hits the road of reality. A very simple classroom exercise illustrated that point neatly: our instructor had gone to the trouble of individually placing twenty M&M candies into twenty numbered plastic bags and then asked us to “accept” or “reject” each M&M based on three criteria. The criteria were written down; we were given no additional verbal cues, and we had no “master” M&M on which to base our judgment.

We realized very quickly that these criteria were not nearly as clear-cut as they appeared to be. For example, one criterion specified that the letter “m” on the candy should be “100% visible.” Sounds clear-cut, right? After all, it has a numeric qualifier to help us make our decision! Reality check: have you ever looked at an M&M up close? The next time you do, look for tiny spots where the white ink is thin enough for the underlying color of the candy to bleed through the letter “m.” Question: if the entire outline of the letter “m” appears on the candy but these little flecks of color are bleeding through, does this mean that the “m” is no longer 100% visible?

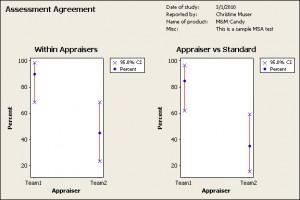

The graph above shows the result of the M&M exercise. It illustrates just how far apart the judgment of perfectly reasonable people can be when they are asked to interpret someone else’s instructions. The left-hand graph shows how much each team agreed with itself after reviewing all 20 candies twice in a row. The right-hand graph shows how much each team agreed with an external standard for evaluating the candies. The fact that the two red lines barely line up with each other illustrates just how far apart the two teams were in their assessment of the same group of M&Ms.

The real issue, of course, has nothing to do with the candy and how it looks. The bigger point lies in something the Six Sigma folks call “operational definitions” and how we use them. The M&M example illustrates just how unpredictable individual judgments can be and how much training and feedback may be required before team members reach similar conclusions – which, in turn, will allow the team to work toward a common goal.

As the M&M example shows, developing operational definitions can be tricky. Definitions may be less clear-cut than we think. We have a limited amount of time in which to develop them. In group settings, we also have to figure in personalities and hidden agendas. Good leadership and negotiation skills are needed to keep everyone focused without suppressing critical input. In the world of sales and marketing we have the additional challenge of dealing with missing and incomplete data. While statistical models go a long way toward filling in the picture, they are difficult to explain and are not always accepted by those whose paychecks depend on them or by those whose experience seems to indicate something else.

Some ideas for dealing with all this will be the subject of future posts. For today I simply want to ask these questions: with so many changes in the health care marketplace, how well are we prepared to make decisions? Which operational definitions do we need to add, update or toss out in order to ensure good decisions for the future?

P.S.: Additional Information About The M&M Graph

This data mimics the results from a Measurement System Analysis (MSA) project with M&M candies. The assignment was to inspect 20 pieces of candy and to determine whether each met these three criteria:

1: the letter ‘m’ is 100% visible

2: the ink for the letter ‘m’ is not smudged

3: there are no chips

Only these written criteria were given. Neither team received additional instructions or a “master” against which to evaluate the candy. Each team was asked to review the candies in two rounds. During the first round, Team 2 decided to fail all 20 pieces of candy, hence that team’s low rate of agreement.
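For readers who want to see how agreement figures like these can be computed, here is a minimal Python sketch. It is not the calculation behind the graph, which I have not reproduced here. It assumes one common convention for this kind of study: a team "agrees with itself" on a candy when both rounds give the same verdict, and "agrees with the standard" only when both rounds match the standard. The function names and the five ratings are made up purely for illustration.

```python
def within_appraiser(round1, round2):
    """Percent of items that received the same verdict in both rounds."""
    if len(round1) != len(round2) or not round1:
        raise ValueError("rounds must be non-empty and of equal length")
    same = sum(1 for a, b in zip(round1, round2) if a == b)
    return 100.0 * same / len(round1)


def versus_standard(round1, round2, standard):
    """Percent of items where BOTH rounds match the external standard."""
    if not (len(round1) == len(round2) == len(standard)) or not standard:
        raise ValueError("all rating lists must be non-empty and of equal length")
    hits = sum(
        1 for a, b, s in zip(round1, round2, standard) if a == s and b == s
    )
    return 100.0 * hits / len(standard)


# Hypothetical ratings for five candies (True = accept, False = reject).
# These values are invented for illustration, not taken from the class.
standard = [True, True, False, True, False]
round1 = [False, False, False, False, False]  # a team that rejects everything
round2 = [True, True, False, False, False]

print(f"Agreement with itself:   {within_appraiser(round1, round2):.0f}%")           # 60%
print(f"Agreement with standard: {versus_standard(round1, round2, standard):.0f}%")  # 40%
```

Note how rejecting everything in one round drags down both numbers, even though the second round is fairly close to the standard.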

Conclusion: gaining agreement about operational definitions is critical. Make sure that everyone has the same training, and verify that everyone in a decision-making role can reach decisions that support the established goal. Repeat training and offer opportunities for feedback and refinement of criteria.